ARTUS

ARTUS (Augmented Reality to Train User Skills) is a project aimed at developing a VR environment for medical skills training. The project was carried out by a consortium that included, among others, Virtual Proteins, Catharina Hospital – Urology, Maastricht UMC+, and Delft University.

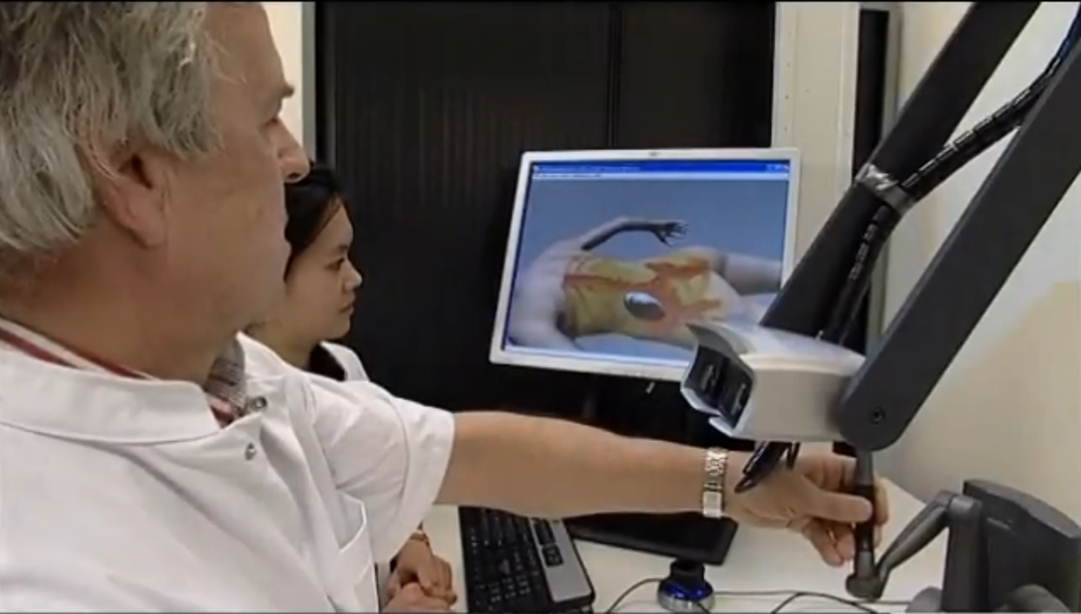

The VR environment consists of a graphical workstation, a desk-mounted stereo display, and a pair of haptic devices. The haptic devices let the user feel the linear forces that result from interacting with a virtual patient. As a pilot, the system was developed to simulate a nephrostomy, a procedure in which a fairly long needle is inserted through the patient's back into a kidney. The procedure is usually performed to relieve a blockage of the kidney. The needle tip is faceted and is monitored by an ultrasound imaging device. The user controls both the needle and the ultrasound probe through the two haptic devices. In addition, one of the haptic devices can serve as a marker for marking the back of the virtual patient. In a real-life procedure, a marker is used to indicate the entry point of the needle.

Sub-millisecond Performance

The main challenge was performance. The dynamics simulation had to refresh at a rate of at least 1 kHz to deliver proper force feedback to the haptic devices, which leaves at most 1 ms per update. We ran a high-fidelity dynamic model for both the needle and the ultrasound probe interacting with a patient model composed of 200K+ triangles. Collision detection is by far the most computationally expensive task. The Sensorium collision-detection library managed to attain sub-millisecond performance on a graphical workstation running a quad-core Intel Xeon (Nehalem) CPU.
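
To make the 1 kHz requirement concrete, here is a minimal sketch of a fixed-rate update loop that checks each step against its 1 ms budget. It is an illustration only and not the ARTUS or Sensorium implementation: `run_haptic_loop` and the `step` callback are hypothetical names, and `step` stands in for whatever collision detection, dynamics, and force output a real system would perform.

```python
import time

RATE_HZ = 1000                # minimum update rate named in the text
BUDGET_S = 1.0 / RATE_HZ      # 1 ms available per dynamics step


def run_haptic_loop(step, n_steps):
    """Call step() n_steps times at RATE_HZ; return the number of overruns.

    `step` is a hypothetical callback standing in for collision detection,
    dynamics integration, and sending forces to the haptic devices.
    """
    overruns = 0
    next_deadline = time.perf_counter() + BUDGET_S
    for _ in range(n_steps):
        started = time.perf_counter()
        step()
        if time.perf_counter() - started > BUDGET_S:
            overruns += 1  # this step took longer than its 1 ms budget
        # Busy-wait until the deadline; sleep() is usually too coarse at 1 ms.
        while time.perf_counter() < next_deadline:
            pass
        next_deadline += BUDGET_S
    return overruns
```

A call such as `run_haptic_loop(my_step, 1000)` would drive roughly one second of simulation and report how many steps missed the budget. In this sketch an overrun is only counted; a real haptic system would more likely run the loop on a dedicated high-priority thread and treat missed deadlines as a fault.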